Say you're a senior baker in a large pharmaceutical, tasked to ship a new medicine

drug for suppressing symptoms of any of the growingly lucrative health conditions of the developed world.

Among simple paper-pushing and the more menial tasks, your drug development pipeline involves figuring

out the correct, for business reasons quite optimal, combination to hundreds of parameters, such as:

ratios of active ingredients,

bulking agents,

centrifugation times,

pH levels,

temperature profiles at each processing step,

choice of solvents and purification steps,

ideal dosage forms,

whether to include any of the other common processes of your establishment,

and so on.

The problem is: because some processes can't be skipped or sped up, every combination you decide to try takes two of your assistant researchers nearly two weeks of laboratory work. But your new flagship drug is expected due next Thursday ...

It is easy to get a thousand prescriptions, but hard to get one single remedy.

— Chinese proverb

Thank god for SAMBO, Rambo of global optimization. It gets in and then it finds minimums of the objective criteria function quickly and efficiently, in least number of evaluations. SAMBO stands for Sequential and Model-Based Optimization. This simple optimization package consists of the following items, each with its own neat, user-friendly, Pythonic interface:

- function

sambo.minimize()to drive constrained and bounded global black-box optimization, design-space exploration and model calibration, modeled after well-known Python packages SciPy and scikit-optimize,1 supporting SOTA optimization algorithms like SHGO,2 SMBO3 and SCE-UA,4 -

class

Optimizerthat provides an ask-and-tell interface, additionally supporting sequential surrogate models produced by estimators like those of scikit-learn, skorch or Keras, with popular algorithms including Gaussian process and tree-based regression built in, -

SamboSearchCV,a faster drop-in replacement forGridSearchCV,RandomizedSearchCVand similar methods of hyperparameter tuning in complex ML pipelines.

See below examples for usage.

- 1 scikit-optimize/scikit-optimize. DOI: 10.5281/zenodo.1157319

- 2 SHGO: Simplicial homology global optimization. DOI: 10.1007/s10898-018-0645-y

- 3 SMBO: Sequential Model-Based Optimization for General Algorithm Configuration. DOI: 10.1007/978-3-642-25566-3 40

- 4 SCE-UA: Effective and efficient global optimization for conceptual rainfall-runoff models. DOI: 10.1029/91WR02985

-

Compatible with Python 3+

Python 3.10+ —Best choice for new and forward-looking projects.

-

Small, clean API

The API reference follows established idiomatic Python doctrine and is easy to wrap one's head around.

-

Out-of-the-box desired behavior

Like Rambo, SAMBO works alone and produces correct results at least 99% of the time.

-

Minimal dependencies

Needs just NumPy/SciPy, optionally uses scikit-learn and Matplotlib.

-

Blazing fast execution

On top of that, fewest objective function evaluations or your money back! Benchmark to prove it.

-

Black-box Bayesian optimization

State-of-the-art optimization techniques painlessly at your disposal.

-

Integral, floating and nominal dimensions

Optimize any kind of input variables including integers, real-valued variables and ordinals/categoricals.

-

Approximate, but converging

Stochastic processes converge to the correct optimum. Error tolerance for you to decide.

-

Beautiful Matplotlib charts

Let you visualize exactly what the algorithms are doing. Reproducible research.

Download

Usage Examples

Use case №1: Find global minimium of an objective/cost function

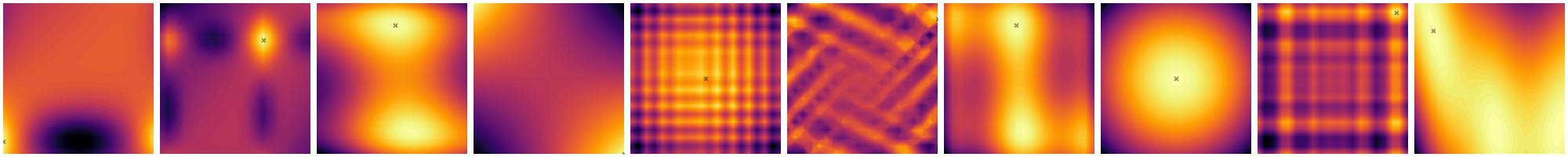

We quickly find the global optimum of an example 2D Rosenbrock's banana function, constrained to a circle with , all in comparatively just a few evaluations.

While this is a simple 2D example, partial-dependence plots and sequence-of-evaluations plots generalize well to several dimensions.

import sambo

from sambo.plot import *

# Extras

import matplotlib.pyplot as plt

from scipy.optimize import rosen

result = sambo.minimize(

rosen, bounds=[(-2., 2.)] * 2, # Mind the dots

constraints=lambda x: sum(x**2) <= 2**len(x),

max_iter=50, method='shgo')

plot_convergence(result)

plot_objective(result) # Partial dependence

plot_evaluations(result) # Sequence of evaluations

plot_regret(result)

plt.show()

<class 'sambo.OptimizeResult'>

message: Optimization terminated successfully.

success: True

fun: 5.205243704996618e-08

x: [ 9.998e-01 9.996e-01]

nfev: 68

xv: [[ 0.000e+00 0.000e+00]

[ 0.000e+00 0.000e+00]

...

[ 9.998e-01 9.996e-01]

[ 9.998e-01 9.996e-01]]

funv: [ 1.000e+00 ... 5.210e-08]Use case №2: Sequential surrogate model-based "Ask-and-Tell" optimization

When your optimization objective is an external process, you may not be able to express it as a simple Python function. Instead, you may ask the optimizer process for the next suggested candidate point (solution candidate), execute the trial (e.g. the two-week "baking" process), then report back your findings (objective result ) to the optimizer for further consideration and refitting. We call this an "ask-and-tell" API.

The estimator= can be any object with a scikit-learn fit-predict API,

including neural networks and modern AI.

from sambo import Optimizer

def evaluate(x):

... # Abstract long and laborious process

return rosen(x)

bounds = [(-2., 2.)] * 4 # 4D

optimizer = Optimizer(

fun=None, # Implies Ask-And-Tell interface

bounds=bounds,

estimator='gp', # or bring your own

)

n_iter = 50

for i in range(n_iter):

suggested_x = optimizer.ask(1)

y = [evaluate(x) for x in suggested_x]

optimizer.tell(y)

best_x, best_fvals = optimizer.top_k()

# Continue the optimization ...

result: OptimizeResult = optimizer.run()

Use case №3: Hyperparameter tuning for machine-learning in quasi-logarithmic time

Use sambo.SamboSearchCV as a drop-in replacement for

GridSearchCV (or even HalvingRandomSearchCV) from scikit-learn

to optimize your machine learning pipeline hyperparameters in sub-linear time,

yet with an algorithm considerably better-informed than simple random search!

# Example setup of a scikit-learn pipeline

from sklearn.datasets import load_breast_cancer

from sklearn.tree import DecisionTreeClassifier

from sklearn.model_selection import GridSearchCV

X, y = load_breast_cancer(return_X_y=True)

clf = DecisionTreeClassifier()

param_grid = {

'max_depth': list(range(1, 30)),

'min_samples_split': [2, 5, 10, 20, 50, 100],

'min_samples_leaf': list(range(1, 20)),

'criterion': ['gini', 'entropy'],

'max_features': [None, 'sqrt', 'log2'],

}

search = GridSearchCV(clf, param_grid)

# Trying all ~20k combinations takes a long time ...

search.fit(X, y)

print(search.best_params_)

print(search.best_score_)

# Alternatively ...

from sambo import SamboSearchCV

search = SamboSearchCV(clf, param_grid, max_iter=100)

search.fit(X, y) # Fast, good enough

print(search.best_params_)

print(search.best_score_)

print(search.opt_result_)

{'criterion': 'gini',

'max_depth': 6,

'max_features': 'sqrt',

'min_samples_leaf': 1,

'min_samples_split': 5}

0.947269582406721

{'criterion': 'entropy',

'max_depth': 20,

'max_features': None,

'min_samples_leaf': 5,

'min_samples_split': 6}

0.9419940696812453

message: Reached n_iter_no_change (=10) w/o improvement

success: True

fun: -0.9419940696812453

x: [20 6 5 'entropy' None]

nit: 26

nfev: 77

xv: [[15 86 ... 'gini' None]

[1 57 ... 'entropy' 'sqrt']

...

[19 5 ... 'gini' None]

[20 8 ... 'gini' None]]

funv: [-9.244e-01 -9.034e-01 ... -9.139e-01 -9.191e-01]

model: [GaussianProcessRegressor()]Benchmark

It's 2020, and if you're still doing particle swarm, basin-hopping, Monte Carlo or genetic/evolutionary algorithms optimization, you're likely throwing away precious computing cycles, at large! According to published benchmark of most common optimization algorithm implementations on several popular global optimization functions, including some multi-dimensional ones (2–10D), SAMBO out-of-the-box most often converges to correct global optimum, in fewest total objective evaluations, yielding smallest absolute error, with runtime just as fast as that of the best (full stdout output), proving the implementation sound and justified.

| Method | Correct % | Evaluations | Error % | Duration |

|---|---|---|---|---|

| sambo.minimize(smbo) | 100% | 239 | 0 | 25.37 |

| sambo.minimize(sceua) | 100% | 551 | 0 | 0.08 |

| direct † | 100% | 1388 | 0 | 0.02 |

| dual_annealing † | 100% | 6462 | 0 | 0.27 |

| sambo.minimize(shgo) | 92% | 219 | 1 | 0.03 |

| differential_evolution | 92% | 13953 | 0 | 0.16 |

| scikit-optimize | 75% | 290 | 2 | 60.60 |

| Nelder-Mead † | 75% | 301 | 14 | 0.01 |

| Optuna | 75% | 360 | 2 | 2.51 |

| nevergrad | 75% | 1040 | 7 | 4.05 |

| COBYQA | 67% | 134 | 7 | 0.15 |

| COBYLA | 67% | 215 | 15 | 0.00 |

| shgo | 67% | 241 | 13 | 0.01 |

| SLSQP | 67% | 266 | 12 | 0.01 |

| Powell † | 67% | 323 | 16 | 0.00 |

| hyperopt | 67% | 998 | 2 | 9.39 |

| trust-constr | 67% | 1044 | 7 | 0.16 |

| TNC † | 58% | 233 | 16 | 0.01 |

| basinhopping | 58% | 3424 | 21 | 0.11 |

| CG † | 50% | 414 | 19 | 0.01 |

| † Non-constrained method; constrained by patching the objective s.t.: | ||||

∗ The following implementations were considered:

| ||||

Citation

If you find this package useful in your academic research, please consider citing:

@software{SAMBO,

author = {Kernc},

title = {SAMBO: Sequential and Model-Based Optimization: Efficient global optimization in Python},

url = {https://sambo-optimization.github.io},

doi = {https://doi.org/10.5281/zenodo.14461363},

year = {2024}

}What Users are Saying

The proof of [this] program's value is its existence.

A. Perlis

We are all tasked to balance and optimize ourselves.

M. Jemison

[...] When all else fails, read the instructions.

Cahn

You're bound to be unhappy if you optimize everything.

D. Knuth

After scikit-optimize went MIA, the release of this Bayesian optimization software package is just about optimally timed.

B. Kralz